This is the foundation page for the topic of Verification.

The Standard Caution About Abstractions applies to this page.

Introduction

As a System Engineering abstraction, verification is the process of establishing objective evidence. We use the evidence to substantiate claims about parameter values being within some particular range.

Without a related claim the processes are mere research (e.g., Development Testing). Research can accidentally evolve into a science project so, unfortunately, these activities must be watched closely on any Development project.

Objectivity is a crucial aspect of verification for the usual reasons: the complete provenance is available, so that results can be independently repeated. Objectivity also supports the bounding of legal liabilities with respect to the product.
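As a minimal sketch of the ideas so far, a claim can be modeled as a parameter with an acceptable range, and evidence as a value with a record of its provenance. The class names, field names, and the example identifier `WR-001` are hypothetical, chosen only for illustration; they are not taken from any standard or tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Claim:
    """A claim that a parameter's value lies within a closed range."""
    parameter: str
    lower: float
    upper: float


@dataclass(frozen=True)
class Evidence:
    """A value for a parameter, plus a record of where that value came from."""
    parameter: str
    value: float
    provenance: str  # hypothetical free-text field, e.g. a report identifier


def substantiates(evidence: Evidence, claim: Claim) -> bool:
    """Return True only if the evidence is traceable and supports the claim."""
    if evidence.parameter != claim.parameter:
        return False  # evidence about a different parameter proves nothing
    if not evidence.provenance.strip():
        return False  # without provenance the result cannot be repeated
    return claim.lower <= evidence.value <= claim.upper


# Hypothetical example: "weight less than some given amount"
weight_claim = Claim("weight_kg", 0.0, 12.5)
measured = Evidence("weight_kg", 11.8, "WR-001")
print(substantiates(measured, weight_claim))
```

Note that evidence without provenance is rejected even when the value is in range: in this sketch, that is the (simplified) stand-in for objectivity.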

The types of “claims” subject to verification include (but are not limited to) the following:

– Compliance with a development requirement

– Compliance with a manufacturing requirement

– A particular level of performance under a specific set of circumstances1

– Weight less than some given amount

– Incorporation of a certain Part Number as an internal component

– Performance margin sufficient to meet a regulatory requirement

In the present context, the words “establishing” and “provenance” can entail significant formality. The means of establishment can be thought of as a conceptual spectrum ranging from (on the one hand) the mere formal designation of existing evidence2 to (on the other hand) the creation of new data3. Most real-world projects fall somewhere between the two extremes.

Aside

In theory, development verification costs could be minimized by restricting a development project’s design to rely on existing, readily accepted verification data. This could be accomplished by writing explicit requirements for the design and construction of the developmental item, and by writing explicit verification requirements to use existing data.

Constraints of that type would (however) create a significant internal conflict between the desire to unleash the innovative dogs of the design team (on the one hand) and the need to contain costs associated with dedicated verification tasks (on the other hand). From a System Engineering process perspective, such constraints are “sub-optimizations”, which are usually a bad idea4.

In the hardware world, the issue of manufacturing (both feasibility and cost) makes cost minimization a three-cornered battle. Resolution of conflicts between these perspectives is (or should be) a major focus of Preliminary Design (PDR): the balance between things like “performance”, “verifiability” and “producibility” is part of PDR’s “feasibility” assessment.

It is easy to misinterpret this discussion as being applicable to the development process only. It is important to recall that formal, legally binding verification also applies when an existing design is being qualified against requirements that were not imposed on the original developmental effort for that design. See the intermediate discussion about CI Development for several different situations in which this might occur.

Verification “Methods”

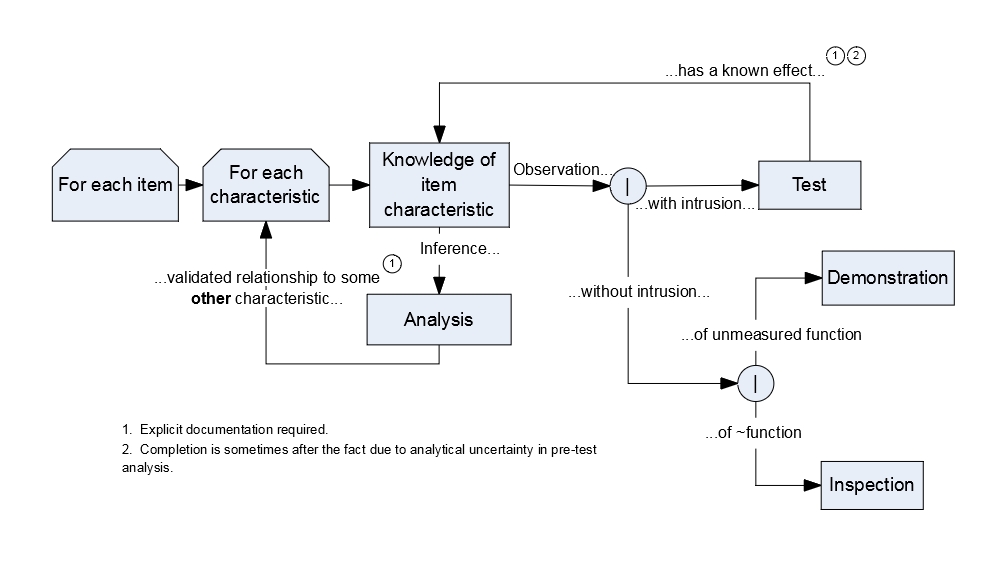

As a crude generalization, we acquire evidentiary knowledge5 by one of two basic means: observation or inference6. Observations either intrude or do not; those that do not intrude observe things that can either be quantified7 or are merely “existential”8. We can use these concepts to differentiate between four basic approaches to verification, as shown in Figure 1, derived from my version of the classic definitions. The four basic approaches are usually referred to as “Verification Methods”.
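The branching described above can be sketched as a small decision function. This is only an illustration of the three distinctions in the text (observation versus inference, intrusive versus not, quantified versus existential). The enum labels are descriptive placeholders of my own; the sketch deliberately does not assign the conventional Method names, which are given in Figure 1.

```python
from enum import Enum


class Approach(Enum):
    """The four basic approaches, labeled by how the knowledge is acquired."""
    INFERENCE = "inference"
    INTRUSIVE_OBSERVATION = "intrusive observation"
    QUANTIFIED_OBSERVATION = "non-intrusive, quantified observation"
    EXISTENTIAL_OBSERVATION = "non-intrusive, existential observation"


def classify(observed: bool, intrusive: bool = False,
             quantified: bool = False) -> Approach:
    """Walk the decision tree: observe or infer, intrude or not, quantify or not."""
    if not observed:
        return Approach.INFERENCE  # knowledge derived rather than seen
    if intrusive:
        return Approach.INTRUSIVE_OBSERVATION
    if quantified:
        return Approach.QUANTIFIED_OBSERVATION
    return Approach.EXISTENTIAL_OBSERVATION  # the thing is present, or it is not


# Hypothetical example: confirming that a given Part Number is installed
print(classify(observed=True, intrusive=False, quantified=False).value)
```

There is no fifth branch for assumption or guesswork: every input falls into exactly one of the four categories.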

It will9 be noted that the concept of “observability” is central to this perspective of verification: we can only verify things if we can somehow see what’s going on. If we cannot observe a characteristic10 then we cannot obtain information to substantiate any claims. This is also true with regard to claims about all characteristics, including requirements11.

It is critically important to observe12 that Figure 1 makes no allowance for assumptions, guessing, omphaloskepsis, or any processes that cannot be categorized as one of the four I’ve mentioned above13. That disallowance is “with malice14 aforethought”, because none are admissible. Unfortunately, many others have been tried.

The observability of characteristics about which claims are15 made is a critical follow-on from Topical Parameterization which is, itself, a core concept in System Engineering. We often keep rummaging through candidate parameterizations16 until we understand the observability of their associated characteristics and, by extension, have a reasonable perspective on how related claims can be substantiated by objective evidence. This is particularly true when we’re generating development requirements, and is the primary reason that such requirements are not finalized17 until the preliminary design has been agreed on: fundamental characteristics of the design can strongly influence the observability of the parameters about which design-to requirements are written, and we need to formally identify and constrain the design approach with regard to them.

Aside

There has long been a strongly prevalent notion among the general SE community that requirements at all levels of system indenture should be completely free of design constraints. It will be noticed (correctly) that the immediately preceding paragraph defies certain aspects of that idea.

In defense of that defiance by way of inductive counter-example, it is not uncommon to decide that a component might be electrically powered…which is clearly a constraint on the detailed design of that component, and is usually allocated as a development requirement. The same is true for an early decision to control an item digitally instead of by analog means.

To be blunt…yes, we do write requirements that constrain the design approach…and the more explicit we are about what constraints we really do need or intend, the better off we’ll be.

Of course, Figure 1 and the preceding discussion over-simplify the practices associated with this issue. They’re intended to introduce the philosophical concepts involved. The figure only reveals the fundamental character of abstract verification methods, and is not intended to be rigidly executed under any and all circumstances. In the real world, there are subtleties involved, with many nuances of implementation from one technical field to another and differences of standard nomenclature within the SE community18.

Aside

In my experience, test and analysis are often considered to be mutually exclusive, alternative approaches for the verification of any given claim. It is far more effective to consider them as complementary: analysis can only consider those aspects of the design known to the analyst19; on the other hand, we often cannot test the entire range of conditions20. A single claim might (therefore) be verified by a sequence or combination of methods rather than a single method.

By extension, a single claim might be verified by a network of methods, some of which might proceed in parallel based on a single set of inputs21. This concept is sometimes referred to as “method stacking”. Some authorities severely deprecate it, usually with the intention to replace it by a single “verify by analysis”. When executed (however) that intention often results in verification requirements communicating no relevant, useful technical direction.
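One way to picture such a network is as a set of verification activities with dependencies between them. The sketch below is illustrative only: the `Activity` structure, the helper functions, and the activity names are all hypothetical. It models one baseline test anchoring two different analyses, and treats the claim as verified only when every activity in the network is complete.

```python
from dataclasses import dataclass, field


@dataclass
class Activity:
    """One verification activity within the network for a single claim."""
    name: str
    method: str                       # e.g. "test" or "analysis"
    depends_on: list = field(default_factory=list)
    complete: bool = False


def ready_to_run(network: dict) -> list:
    """Names of incomplete activities whose inputs are all complete."""
    return [
        a.name for a in network.values()
        if not a.complete and all(network[d].complete for d in a.depends_on)
    ]


def claim_verified(network: dict) -> bool:
    """The claim stays unverified until every activity has been completed."""
    return bool(network) and all(a.complete for a in network.values())


# Hypothetical network: one sensitivity test anchors two parallel analyses.
network = {
    "sensitivity_test": Activity("sensitivity_test", "test"),
    "analysis_a": Activity("analysis_a", "analysis", ["sensitivity_test"]),
    "analysis_b": Activity("analysis_b", "analysis", ["sensitivity_test"]),
}
print(ready_to_run(network))          # only the test can start
network["sensitivity_test"].complete = True
print(ready_to_run(network))          # both analyses may now proceed in parallel
print(claim_verified(network))
```

Naming each activity and its inputs keeps the technical direction visible, which is exactly what is lost when the whole network is collapsed into a single “verify by analysis”.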

Aside (Regarding The Scientific Method)

Returning to the notion of “objective evidence”, we can also regard the SE concept of Verification as an implementation of the final stage of the Scientific Method22. No matter which Method is selected, any claim is considered to be unsubstantiated until verified otherwise. System Engineering admits to no presumption of compliance.

This is conceptually analogous to the idea of the “null hypothesis” in statistical testing: tests are run to show the existence of the effect, not to show the lack of one23. This should recall to the reader’s attention the loop covered in the Intermediate CI Development Cycle: absent verification of a compliant design, try again.

In that context (therefore) “I can’t see any reason this won’t work” is not a valid Verification Method. Our job as developers is to substantiate that a claim is met…not to challenge others to substantiate that it is not. It is not possible for me to communicate how many times I’ve seen this notion disputed, nor the vigor of that counter-argument24.

Footnotes

- Which might, or might not, have been developed in response to a requirement.[↩]

- At the one extreme, where reliable evidence exists, new data do not have to be created.[↩]

- At the other extreme, where existing evidence is not available or is not readily accepted by both the developer and the customer, costly project-specific tasks are necessary.[↩]

- In effect, this type of constraint implies that the Engineers aren’t allowed to learn anything they didn’t already know.[↩]

- I use the phrase “evidentiary knowledge” specifically to side-step the argument that “belief” (including revealed belief) is a form of knowledge. Having experienced that debate on more than one occasion, I consider it to distract from the point I need to make.[↩]

- An argument could be made that book-learning is neither observation nor inference. A counter-argument could be made that reading something in a book is transmittal of the evidentiary knowledge rather than acquisition.[↩]

- Strictly speaking, the value of the parameter being observed can be identified as a region (a subset) of a defined set…but I try not to wax TOO pedantic![↩]

- That is, either they’re present, or they’re not. An argument could be made that this is just the difference between “quantity = 0” and “quantity = 1”. A counter-argument could be made that the issue is really a matter of having (or not having) a particular dimension in the vector of attributes (which is itself a set), and that we’d be silly to keep track of all the attributes that are NOT applicable to a given item. For my part, I’d argue that the argument is silly, and then go do the simple thing.[↩]

- I hope![↩]

- or infer its value from some other characteristic with a relevant relationship that has been or can be observed[↩]

- Because we will, eventually, try to claim compliance with each requirement.[↩]

- See what I did, there?[↩]

- Some authorities deny that “simulation” is a form of analysis, so add it as a fifth method. The definition of “analysis” used here is, however, adequate to cover that concept. Therefore, I don’t make it an explicit option.[↩]

- And prejudice[↩]

- or will be[↩]

- Even though we don’t always realize that’s what we’re doing![↩]

- At least, in the legacy practices.[↩]

- Not to mention internal inconsistencies and outright confuscations.[↩]

- anything not known is…unknown…but the hardware tells no lies.[↩]

- or don’t want to pay for it[↩]

- We might, for example, test the sensitivity of some baseline functionality, using that test to anchor two different types of analysis. Or, do a single analysis that feeds two or more different tests under different environmental regimes.[↩]

- That is, wherein we attempt to confirm an hypothesis.[↩]

- an “effect” is never assumed to be present beforehand.[↩]

- Desperate people can be remarkably energetic.[↩]